A faulty software update issued by cybersecurity firm CrowdStrike caused a global tech outage on July 19. The outage, which affected computers running Microsoft Windows worldwide, grounded flights, knocked media outlets offline, and disrupted hospitals, banks, small businesses and government offices.

CrowdStrike said the outage was not the result of hacking or a cyberattack, adding that a fix was on the way. But hours later, the disruptions continued.

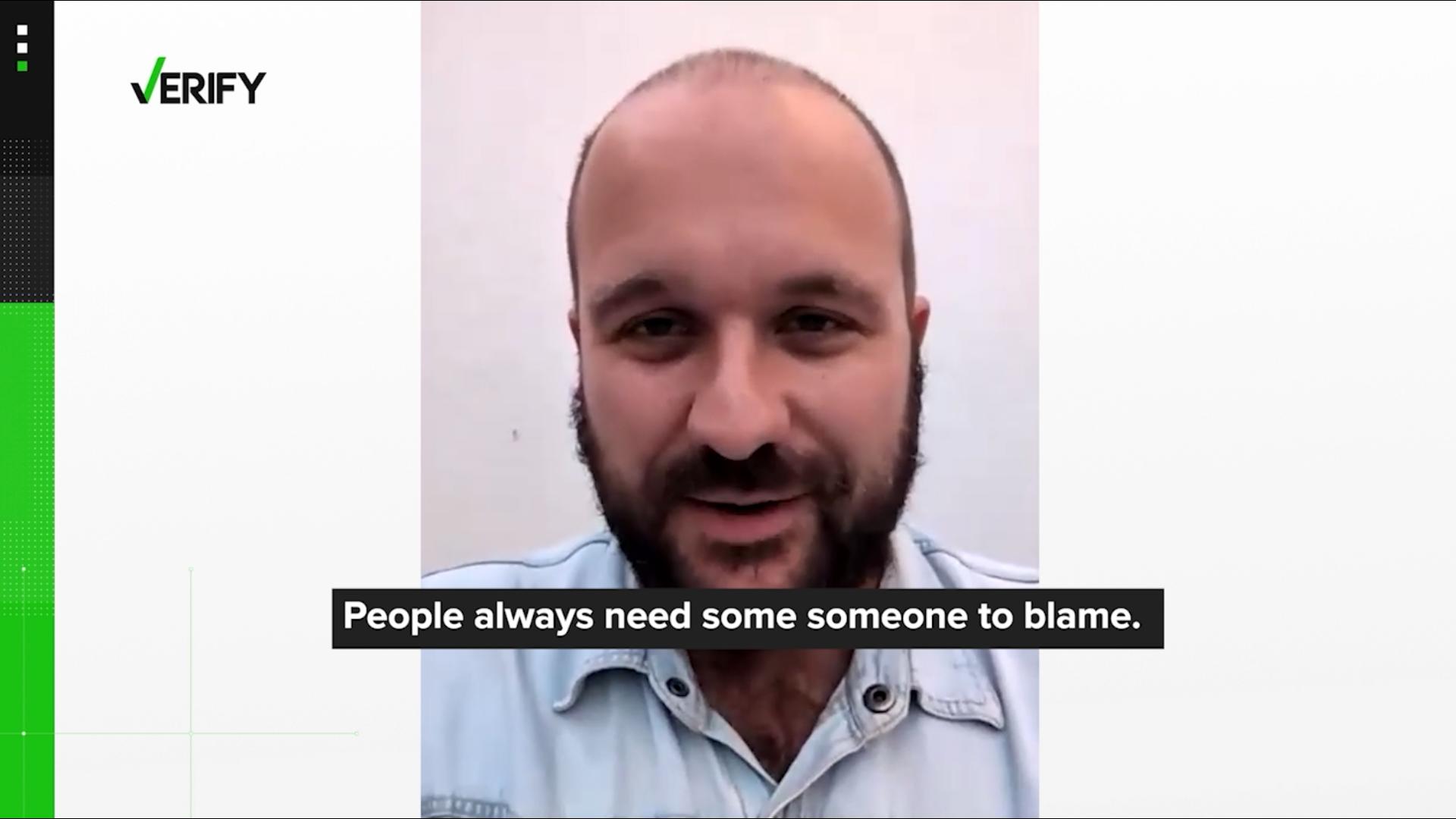

Just a few hours after the outage began, a man claiming to be responsible for the software update posted a photo of himself at what appeared to be the CrowdStrike offices. In a follow-up video, the man also shared his version of what he says caused the outage. The photo and video posts have garnered over 24 million views in less than 24 hours.

“First day at Crowdstrike, pushed a little update and taking the afternoon off ✌️,” the man wrote.

Some people who commented on the viral posts questioned whether the man’s claims were real.

THE QUESTION

Is a photo of a man claiming to take responsibility for the CrowdStrike outage real?

THE SOURCES

- Vincent Flibustier, a media education trainer who specializes in fake news and social networks

- Social media posts on X and Facebook by Vincent Flibustier

- Google Translate

- EzDubs, an AI video dubbing tool

THE ANSWER

No, a photo of a man claiming to take responsibility for the CrowdStrike outage is not real.

WHAT WE FOUND

The photo of a man claiming to take responsibility for the CrowdStrike outage is fake, according to Vincent Flibustier, the man who appeared in and created the now-viral photo and video. On July 19, Flibustier confirmed on his X and Facebook pages that the photo and video he shared of himself were fake.

“I made the fingers with generative AI and... It looks like I have dwarf hands 😂,” he said in an X post about the viral photo that appears to show him putting up a peace sign at the CrowdStrike offices.

Flibustier said he used Adobe Photoshop and the software program’s generative AI feature to edit himself and the “dwarf” hand into the viral photo. He also said he used another AI tool to translate his voice from French to English in the viral follow-up video.

Later, in another X post, Flibustier shared details on what he believes made people fall for the fake viral posts, which VERIFY translated from French to English using Google Translate:

“Several things that make it a good fake that worked: 👇

1- No culprit named yet, I bring it on a platter, people like to have a culprit.

2- The culprit seems completely stupid, he is proud of his stupidity, he... takes his afternoon off on... the first day of work...

3- This falls right into a huge buzz in which people absolutely need to have new information, and a fake is by nature new, you won't read anything else.

4- In English = very easy to share internationally, with the vast majority of people who have no idea who I am.

5- Dwarf fingers are stupid, but they distract people from other things (like the fact that I have a horn on my head because of bad clipping)

6- Confirmation bias: People want to believe it, it's so funny. ‘I like the information, so it is true,’” Flibustier wrote.

Flibustier is a media education trainer who specializes in fake news and social networks, according to his official website. He also runs Nordpresse, a Belgian parody news site.

“Since 2016, I have been giving conferences/presentations/training sessions on fake news, verifying information on the internet, web scams, etc. The subject has fascinated me since the creation of the parody site Nordpresse in 2014,” Flibustier says on his website.

The Associated Press contributed to this report.